Midjourney Decoded: The Architect’s Companion to Visual Thinking with AI

The Ultimate Refresher on Midjourney. A brush-up, a breakdown, and a blueprint to use Midjourney like it was made for spatial design.

Remember When Midjourney Felt Like Magic?

Just a year ago, we were typing strange poetic combinations into a Discord chat and watching uncanny architecture materialise out of digital mist. Midjourney wasn't a tool; it was a spellbook.

But like all tools of transformation, magic eventually gets an upgrade.

With V7 and a suite of new features, Midjourney has matured into something more structured, more controllable, and more suited than ever for architects, interior designers, and spatial storytellers. This article is your reorientation: a guide for those who dabbled, wandered, or paused their Midjourney use and now want to come back sharper.

Whether you're ideating early sketches, creating mood studies, or exploring spatial narratives, Midjourney is no longer an aesthetic experiment. It's a visual reasoning engine. And importantly, it's not a rendering engine. It doesn't simulate optics; it interprets imagination.

From Discord to the Web: Midjourney’s Evolving Interface

Midjourney began on Discord, as command-line poetry in a chat room. Today, it has grown into a visual playground on the web, with tools that support every step of a designer’s thought process.

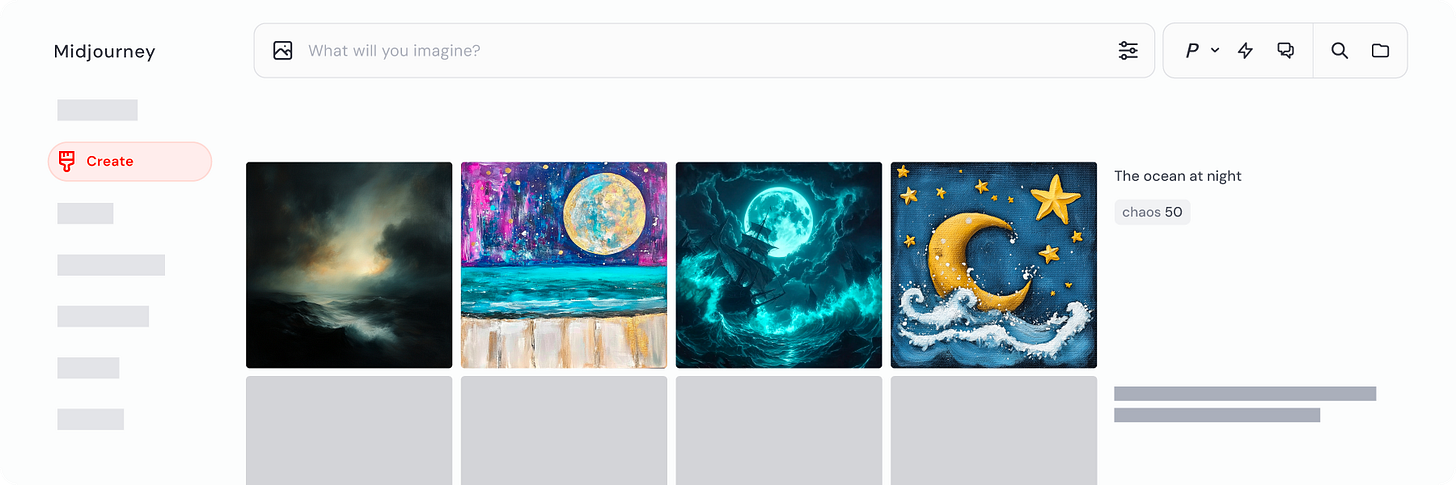

Create: Use the prompt bar, conversational mode, or even voice to generate images. Draft Mode lets you generate quicker iterations, while the Animate button converts stills into short cinematic clips.

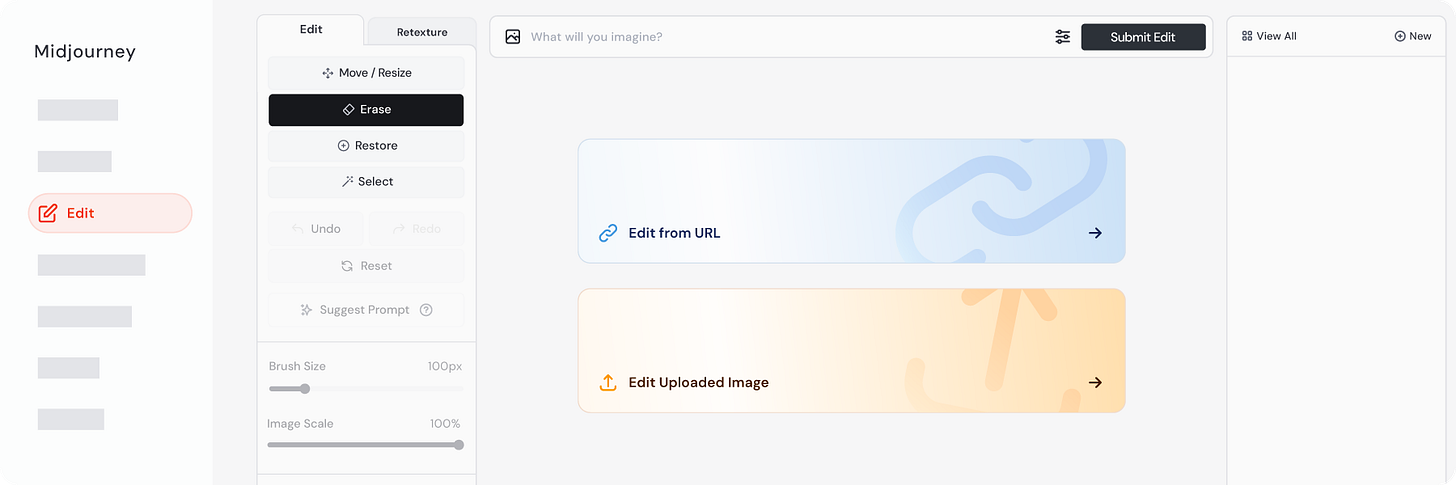

Edit: The integrated editor allows inpainting, object removal, and composition tweaks. You can also re-edit external images using the same tools.

Organise: Folders help you structure your creative chaos. Sort generations by typology, phase, or client presentation.

Rooms: Private rooms allow solo experimentation or collaborative design sprints. Keep drafts confidential or run thematic explorations with your team.

It’s no longer just about beautiful images; it’s about building a process, one that aligns with how designers think, iterate, and evolve ideas.

I. Midjourney as a Design Companion

Midjourney doesn’t function like CAD. It doesn’t behave like a renderer. And it never will.

It was built to externalise thoughts that are abstract, metaphorical, or gestural. In other words: architectural sketching of the 21st century. It reads between the lines of your prompt, surfaces aesthetic tendencies, and lets you modulate them with precision.

Why spatial thinkers love it:

Camera-like controls: The --ar (aspect ratio), --style, and --stylize parameters let you simulate views, scale, and expression.

Imagination externalised: It becomes the fastest way to prototype atmospheres, facades, or programmatic ideas.

Textural intuition: It handles materials like concrete, bamboo, glass, sandstone, or even surreal combinations with surprising literacy.
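To make the camera-like controls concrete, here is a minimal Python sketch that turns the pixel size of a presentation frame into an --ar value. The function name is hypothetical and only formats text you would paste after a prompt; it does not talk to Midjourney.

```python
from math import gcd


def aspect_ratio_flag(width: int, height: int) -> str:
    """Reduce a width x height pair to its smallest whole-number ratio
    and format it as an --ar parameter (hypothetical helper)."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    divisor = gcd(width, height)
    return f"--ar {width // divisor}:{height // divisor}"


# A landscape presentation frame and a portrait social post.
print(aspect_ratio_flag(1920, 1080))  # --ar 16:9
print(aspect_ratio_flag(1080, 1350))  # --ar 4:5
```

Starting from the frame you will actually present in keeps the composition honest from the first generation.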

II. Versions Recap: MJ 5.2, 6, and the Rise of 7

Midjourney 5.2: Known for its soft lighting, cinematic tones, and poetic abstraction. Prompts were short, keyword-based, and stylised.

Midjourney 6: Marked a jump in detail retention and realism. More control over coherence, less “hallucination.”

Midjourney 7: Introduces personalisation, style reference upgrades, omni-referencing, and a much cleaner interface.

The good news? You can switch between them anytime.

III. Midjourney Basics: Commands and Parameters

Here's a foundational list for daily architectural and design use:

IV. The Upgrade: What’s New in V7

Midjourney V7 is more than an aesthetic leap—it’s a design logic shift.

Omni-Reference (--oref)

Place consistent characters, objects, or spatial features across images

Use --ow (omni-weight) to adjust the influence

Style Reference (--sref)

Use an image to lock in mood, lighting, and stylistic choices

Less subject leakage, more fidelity across multiple prompts

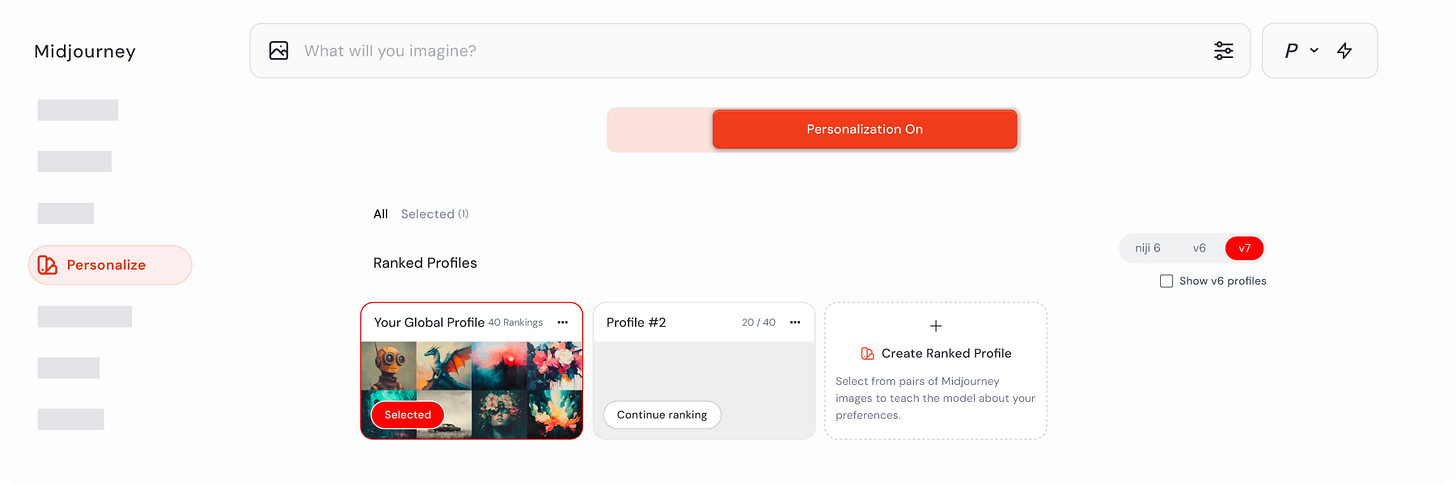

Personalisation Mode (--p)

Midjourney adapts to your style over time

Think of it as a studio intern that “learns your eye”
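If you keep your references in a project folder, a small helper can assemble these three controls into one parameter string. This is a sketch under assumptions: the function name and the example.com URLs are placeholders, and the weight is passed through without checking it against Midjourney's allowed range, so confirm the limits in the official documentation.

```python
from typing import Optional


def reference_flags(
    oref_url: Optional[str] = None,
    omni_weight: Optional[int] = None,
    sref: Optional[str] = None,
    personalise: bool = False,
) -> str:
    """Build the reference part of a prompt (hypothetical helper).

    Only formats text; nothing is sent to Midjourney.
    """
    parts = []
    if oref_url:
        parts.append(f"--oref {oref_url}")
        # --ow only makes sense alongside an omni-reference image.
        if omni_weight is not None:
            parts.append(f"--ow {omni_weight}")
    if sref:
        parts.append(f"--sref {sref}")
    if personalise:
        parts.append("--p")
    return " ".join(parts)


# Placeholder URLs: swap in your own uploaded images.
print(reference_flags(
    oref_url="https://example.com/chair.png",
    omni_weight=200,
    sref="https://example.com/mood.png",
    personalise=True,
))
```

Append the returned string to the end of your descriptive prompt, after the text and before you submit.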

Video Generation V1

Midjourney now lets you animate your still images into 5 to 21-second videos—perfect for mood walkthroughs, spatial storytelling, or cinematic glimpses of product and fashion concepts.

Key highlights:

Available on the Web App only.

Start from any image (MJ-generated or external) and generate motion using Auto or Manual options.

Use motion low for subtle ambient movement, motion high for cinematic sweeps.

Extend videos in 4-second increments, up to 21 seconds total.
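The numbers above imply a simple ladder of clip lengths: a 5-second base clip plus 4-second extensions until you reach 21 seconds. The Python sketch below works through that arithmetic. The constants come from the figures in this article and the function names are made up for illustration.

```python
BASE_SECONDS = 5        # length of the first generated clip
EXTENSION_SECONDS = 4   # each extension adds this much
MAX_SECONDS = 21        # stated upper limit


def possible_lengths() -> list:
    """List every clip length reachable by extending the base clip."""
    lengths = []
    total = BASE_SECONDS
    while total <= MAX_SECONDS:
        lengths.append(total)
        total += EXTENSION_SECONDS
    return lengths


def extensions_needed(target_seconds: int) -> int:
    """Return how many extensions it takes to cover at least target_seconds."""
    if target_seconds > MAX_SECONDS:
        raise ValueError(f"clips top out at {MAX_SECONDS} seconds")
    if target_seconds <= BASE_SECONDS:
        return 0
    # Ceiling division using integer arithmetic.
    return -(-(target_seconds - BASE_SECONDS) // EXTENSION_SECONDS)


print(possible_lengths())     # [5, 9, 13, 17, 21]
print(extensions_needed(12))  # 2
```

So a 12-second mood walkthrough means two extensions and a 13-second clip that you trim afterwards.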

This is more than a gimmick; it’s a practical leap. Designers can now prototype atmosphere as motion. Think shadow behaviour, light movement, or context mood shifts, all from a single prompt.

V. Crafting Prompts: The Midjourney Way

Prompting in Midjourney isn’t about lengthy paragraphs. It’s about clarity, hierarchy, and rhythm. Think like a cinematographer, write like a designer.

Basic Structure:

Subject + Adjectives + Context or Material + Style cues + Parameters

Basic Prompt Template:

[Main Subject or Project Type], [Core Style or Visual Language], [Material Palette], [Lighting Type], [Perspective or View Type], [Mood or Atmosphere], [Time of Day], [Environment or Background Context], [Optional Design Elements or Details] --v [Version] --ar [Aspect Ratio] --stylize [Stylize Value]
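If you reuse the same template across a project, it can help to assemble it programmatically. The Python sketch below joins the descriptive slots with commas and then appends the parameters. The function name, default values, and sample descriptors are illustrative assumptions. Note that --stylize takes a number, while a style reference image goes with --sref.

```python
from typing import Iterable, Optional


def build_prompt(
    descriptors: Iterable[str],
    version: str = "7",
    aspect_ratio: str = "16:9",
    stylize: Optional[int] = None,
    raw: bool = False,
) -> str:
    """Join template slots into one prompt string (hypothetical helper)."""
    # Drop empty slots so the prompt has no stray commas.
    text = ", ".join(d.strip() for d in descriptors if d and d.strip())
    if not text:
        raise ValueError("at least one descriptor is required")
    params = [f"--v {version}", f"--ar {aspect_ratio}"]
    if stylize is not None:
        params.append(f"--stylize {stylize}")
    if raw:
        params.append("--style raw")
    return f"{text} {' '.join(params)}"


# Sample slots in template order: subject, style, materials, lighting,
# view, mood, time of day, context.
print(build_prompt(
    [
        "courtyard house",
        "tropical modernism",
        "exposed concrete and bamboo",
        "soft diffused daylight",
        "eye-level view",
        "calm and contemplative",
        "early morning",
        "dense garden setting",
    ],
    stylize=150,
))
```

Keeping the slots in a list makes it easy to swap one variable at a time, say the material palette, and compare the results side by side.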

VI. Quick Start Blueprint (10-minute Midjourney Reset)

Join the Discord or use the Web App

Switch to Version 7 in /settings or prompt with --v 7

Upload a site photo and use it as an image prompt or omni-reference, or generate an image.

Experiment with --style raw for realism and architectural honesty

Try inpainting and zoom-out for narrative continuity

Play with --sref and --p to develop a signature look

Use Remix or Vary Region for evolving iterations

Press 'Animate' on your favourite frame to get a mood video

Why This Still Matters

Midjourney taught us to see differently. To think like light. To sketch with mood. To construct the atmosphere before form. And now, with V7, we don’t just make images faster, we articulate intent better.

Midjourney is not a render engine. It's a sketchbook for the intangible. A design ally that listens. If you haven’t used it in a while, consider this your return invitation. If you have, then it’s time to go deeper.

Want to go deeper?

Explore the full potential of AI-driven visual thinking in our 330-page hardcover

Subscribe for more weekly explorations into how AI reshapes design intelligence.

Want to meet up? Here’s where we are headed this week!

I’m gonna be speaking at the Transformations 2025 conference organised by the Council of Architecture with the DY Patil School of Architecture in Navi Mumbai on the 27th of June 2025. Don’t hesitate to come up and talk!

Next, I’m gonna be speaking at my home ground in Hubli, thanks to the wonderful folks at the IIID Hubli-Dharwad Centre, at their Ctrl+Shift+Design event in Hotel Naveen Hubli, on the 28th of June 2025. Come say hi if you’re around!

I’m Sahil Tanveer of the RBDSai Lab, signing off for the week. I promote, consult, and apply AI for Architects along with my Architecture and Design Studio, RBDS. If you liked this Substack:

You will love my book, DELIRIOUS ARCHITECTURE: Midjourney for Architects. It is a 330-page hardcover showcasing the potential of AI in Architectural Design. It is available on Amazon worldwide.

You can bend your minds with our WhatsApp channel AI IN ARCHITECTURE where we talk about AI and its impact on us and the built environment.

You can consult with us on AI for your architecture studio. We have multiple levels of learning and integration, from a Beginners session to the AIMM Assessment and beyond. Get in touch with us at sahil@rbdsailab.com or check out our page www.rbdsailab.com

I’m talking about AI. Our team is set to visit key cities of India for architectural conferences, Podcasts and exclusive student interactions at architecture schools. We’d love to come over for an engaging meetup, hands-on workshop, or a creative collab. Enquiries to sahil@rbdsailab.com